Robotics: Science and Systems VIII

Estimating Human Dynamics On-the-fly Using Monocular Video For Pose Estimation

Priyanshu Agarwal, Suren Kumar, Julian Ryde, Jason Corso, Venkat Krovi

Abstract:

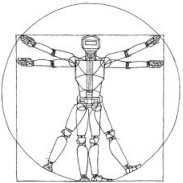

Human pose estimation using uncalibrated monocular visual inputs alone is a challenging problem for both the computer vision and robotics communities. From the robotics perspective, the challenge is one of pose estimation of a multiply-articulated system of bodies using a single non-specialized environmental sensor (the camera), thereby creating low-order surrogate computational models for analysis and control. In this work, we propose a technique for estimating the lower-limb dynamics of a human based solely on behavior captured with an uncalibrated monocular video camera. We leverage our previously developed framework for human pose estimation to (i) deduce the correct sequence of temporally coherent gap-filled pose estimates, (ii) estimate physical parameters, employing a dynamics model incorporating the anthropometric constraints, and (iii) filter the optimized gap-filled pose estimates, using an Unscented Kalman Filter (UKF) with the estimated dynamically-equivalent human dynamics model. We test the framework on videos from the publicly available DARPA Mind's Eye Year 1 corpus [8]. The combined estimation and filtering framework not only results in more accurate, physically plausible pose estimates, but also provides pose estimates for frames where the original human pose estimation framework failed to provide one.

Bibtex:

@INPROCEEDINGS{Agarwal-RSS-12,

AUTHOR = {Priyanshu Agarwal AND Suren Kumar AND Julian Ryde AND Jason Corso AND Venkat Krovi},

TITLE = {Estimating Human Dynamics On-the-fly Using Monocular Video For Pose Estimation},

BOOKTITLE = {Proceedings of Robotics: Science and Systems},

YEAR = {2012},

ADDRESS = {Sydney, Australia},

MONTH = {July},

DOI = {10.15607/RSS.2012.VIII.001}

}